Pegah Salehi successfully defended her thesis

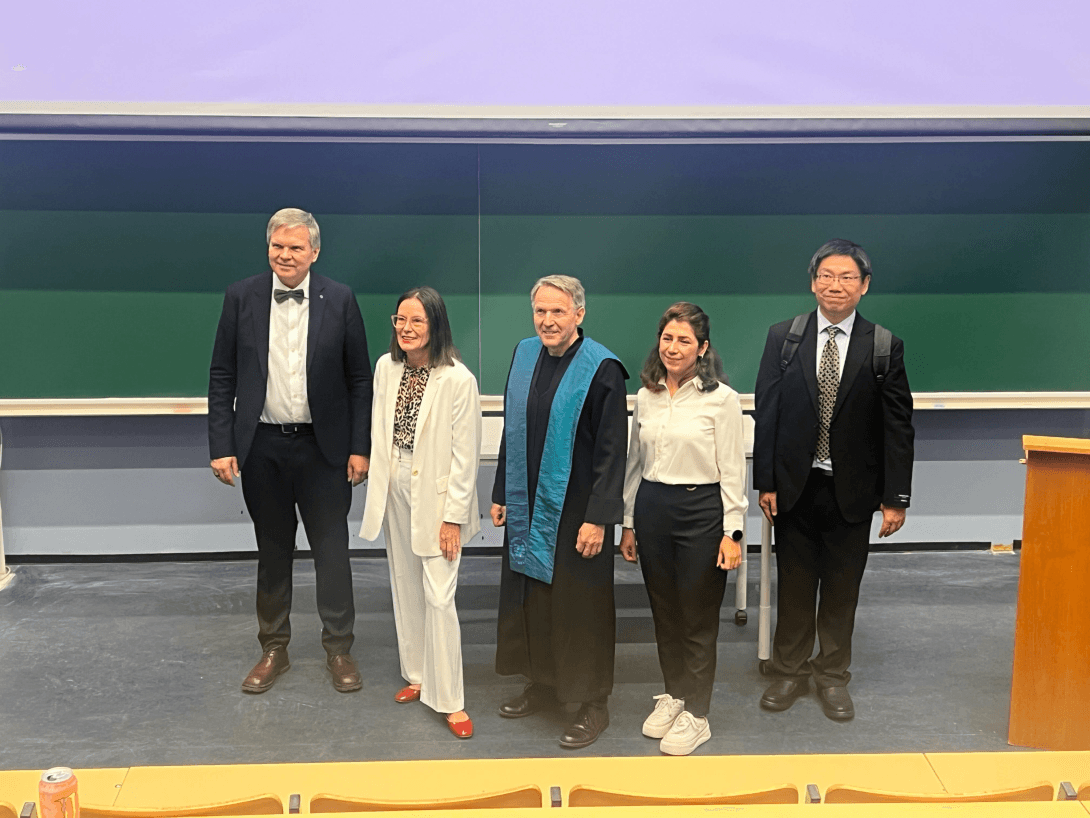

Congratulations to Pegah Salehi, who successfully defended her thesis "Visual Realism in AI-Driven Virtual Training Environments for Child Investigative Interviews".

Supervisory Committee:

- Professor Dag Johansen, IFI, UiT (Main Supervisor)

- Professor Pål Halvorsen, IFI, SimulaMet

- Professor Michael Riegler, IFI SimulaMet/UiT

- PostDoc Saeed Shafiee Sabet, SimulaMet

- Researcher Vajira Thambawita, SimulaMet

Evaluation Committee:

- 1st Opponent: Professor Cheng-Hsin Hsu, Department of Computer Science, National Tsing Hua University, Taiwan

- 2nd Opponent: Professor Letizia Jaccheri, NTNU

- Chair of the committee: Professor Gunnar Hartvigsen, IFI, UiT

Abstract

The imperative to protect children from abuse underscores the critical need for highly skilled professionals capable of conducting sensitive investigative interviews. These interviews are often pivotal in legal and welfare responses, particularly as a child’s testimony can be the primary source of evidence. Effective training for such professionals requires solutions that are not only scalable and dynamic but also address the visual dimension of simulated experiences, which plays a foundational, yet complex, role. Traditional training methodologies, however, frequently encounter limitations in providing interactive and visually convincing practice environments, thereby restricting opportunities for comprehensive skill refinement. This doctoral research confronts these pressing challenges by systematically investigating how artificial intelligence (AI) can be employed to develop advanced avatar-based systems. The core of this investigation lies in understanding and optimizing the visual components of these systems to create impactful and accessible training tools for this sensitive field. Motivated by these existing limitations in interviewer training, this research aims to answer the central research question: How can AI be used to develop a visually realistic avatar-based system for investigative interview training with children? To comprehensively answer this question, the thesis delineates five principal research objectives. 
These include: identifying the key challenges inherent in the development of child avatar systems for investigative training; investigating how varying degrees of visual realism in these avatars impact the learning experience and user acceptance; conducting a comparative analysis of 2D versus 3D virtual environments to determine their effectiveness for training child protective service (CPS) professionals; developing and refining talking-head generation models through enhanced Audio Feature Extraction (AFE) to optimize technical performance and ensure scalability for practical applications; and examining the benefits of emotionally expressive AI-driven child avatars, along with the feasibility of implementing dynamic facial emotion expression capabilities. Employing a Design Science Research Methodology (DSRM), this research iteratively designed, developed, demonstrated, and rigorously evaluated an AI-driven child avatar system. Key contributions include a multimodal AI system that integrates Natural Language Processing (NLP), speech-to-text (STT), text-to-speech (TTS), and advanced visual avatar generation techniques to simulate authentic and interactive child responses with a focus on visual fidelity. Empirical findings revealed that while photorealistic avatars generally enhance perceived visual authenticity, stylized avatars were often preferred in conversational contexts to mitigate the “uncanny valley” effect. Comparative evaluations demonstrated that 3D virtual reality (VR) environments significantly boost visual immersion and emotional resonance, whereas 2D environments offer superior usability and comfort for extended training. Significant technical advancements in AFE for visually synchronized talking-head models drastically reduced latency and improved audio-visual coherence.
Furthermore, the integration of emotionally expressive avatars, enabled through technologies such as NVIDIA Omniverse Audio2Face and Unreal Engine, was explored as a means to improve trainees’ ability to interpret subtle psychosocial cues. Preliminary findings indicated promising perceptual effects, while also highlighting the key role of audiovisual congruence and the technical challenges involved in achieving emotionally believable avatar interactions. Collectively, this dissertation establishes a foundational framework for developing more accessible, visually engaging, and ultimately more effective training tools. By advancing the understanding and application of visual realism and interactive capabilities of AI avatars, this research endeavors to improve the skills and preparedness of professionals, thereby enhancing the quality of child testimonies, minimizing trauma during investigative processes, and contributing positively to child welfare outcomes.